News

14 February 2022

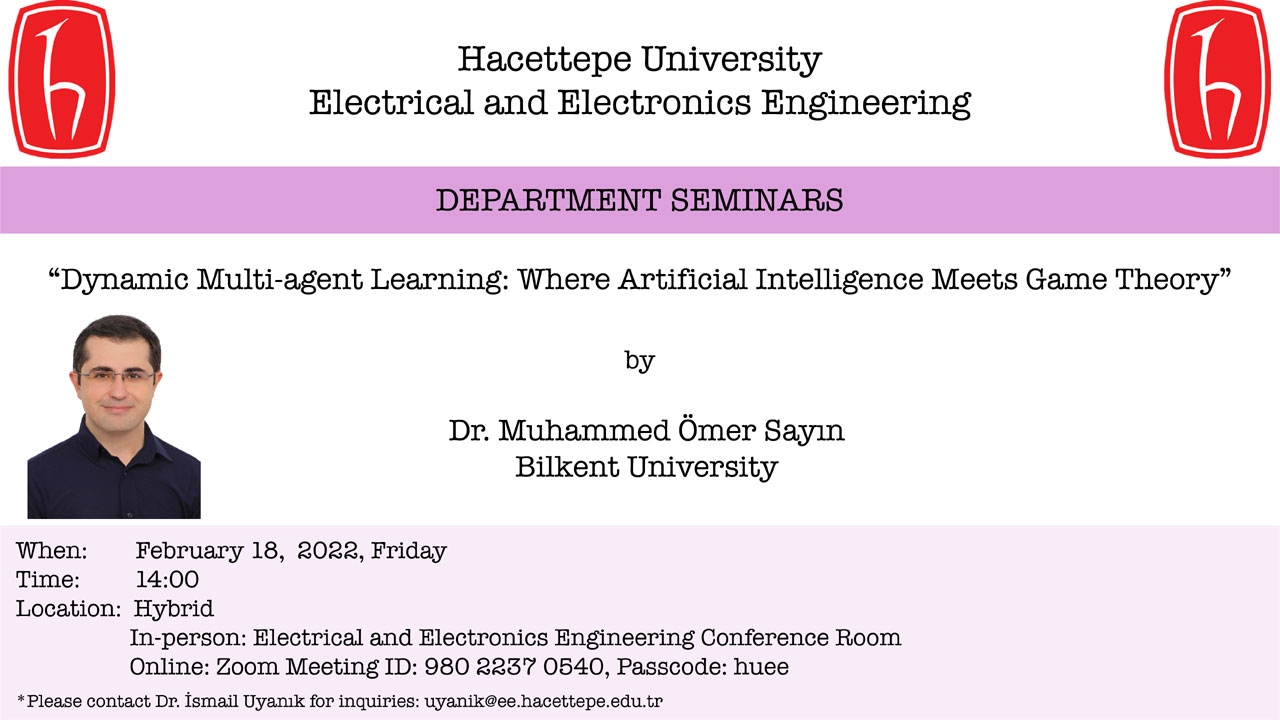

This week's talk in the Department Seminars series of the Hacettepe University Department of Electrical and Electronics Engineering will be given by Dr. Muhammed Ömer Sayın of Bilkent University. Seminar title: "Dynamic Multi-agent Learning: Where Artificial Intelligence Meets Game Theory".

The seminar will be held in the Conference Hall and streamed live on Zoom at the same time.

Everyone interested is welcome to attend.

For questions:

Dr. İsmail Uyanık, uyanik@ee.hacettepe.edu.tr

ABSTRACT

Reinforcement learning (RL) has recently achieved tremendous success in many artificial intelligence applications. Many of the forefront applications of RL involve multiple agents, e.g., playing chess and Go, autonomous driving, and robotics. Unfortunately, the framework on which classical RL is built is inappropriate for multi-agent learning: it assumes that an agent's environment is stationary and does not account for the adaptivity of other agents. Markov games (also known as stochastic games), by contrast, model the interactions among intelligent, human-like decision-makers in dynamic environments. This makes them an ideal model for (decentralized) multi-agent reinforcement learning applications.

In this talk, I present simple, independent learning dynamics for Markov games. They are a novel variant of fictitious play that combines classical fictitious play with Q-learning in a two-timescale learning scheme. Progress on developing independent learning dynamics with systematic convergence guarantees has so far been limited. I will show that the presented dynamics converge almost surely to an equilibrium in certain important classes of games, such as zero-sum and (single-controller) identical-payoff Markov games. The results can also be generalized to cases where players do not know the model of the environment, do not observe opponent actions, and use heterogeneous learning rates. I will also discuss my future research agenda, which is directed towards developing the theoretical and algorithmic foundations of learning and autonomy in complex, dynamic, multi-agent systems.
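For readers new to the building block named above, here is a minimal sketch of *classical* fictitious play in a single-state, two-player zero-sum matrix game. It is not the speaker's two-timescale dynamics: there is no state, no Q-learning, and each player fully observes the opponent's actions. Each player simply best-responds to the empirical frequency of the opponent's past actions. The function names, the matching pennies example, and the lowest-index tie-breaking rule are illustrative assumptions. In two-player zero-sum matrix games, the empirical frequencies of classical fictitious play are known to converge to an equilibrium, and the example checks this behaviour numerically.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def row_values(A: Matrix, col_counts: List[int]) -> List[float]:
    # Unnormalized expected payoff of each row action against the column
    # player's empirical action counts.
    return [sum(A[i][j] * col_counts[j] for j in range(len(col_counts)))
            for i in range(len(A))]


def col_values(A: Matrix, row_counts: List[int]) -> List[float]:
    # Unnormalized expected payoff to the row player of each column action
    # against the row player's empirical counts; the column player minimizes it.
    return [sum(A[i][j] * row_counts[i] for i in range(len(row_counts)))
            for j in range(len(A[0]))]


def fictitious_play(A: Matrix, iterations: int) -> Tuple[List[float], List[float]]:
    """Simultaneous classical fictitious play in the zero-sum game with row payoff matrix A.

    Returns the empirical mixed strategies (action frequencies) of both players.
    Hypothetical helper for illustration; ties are broken towards the lowest index.
    """
    m, n = len(A), len(A[0])
    row_counts = [0] * m
    col_counts = [0] * n
    for _ in range(iterations):
        rv = row_values(A, col_counts)
        cv = col_values(A, row_counts)
        # Both players best-respond to the opponent's past play at the same time.
        # Python's max/min return the first optimal index, i.e. the lowest on ties.
        i = max(range(m), key=lambda a: rv[a])
        j = min(range(n), key=lambda b: cv[b])
        row_counts[i] += 1
        col_counts[j] += 1
    return ([c / iterations for c in row_counts],
            [c / iterations for c in col_counts])


if __name__ == "__main__":
    # Matching pennies: the unique equilibrium has both players mixing 50/50.
    pennies = [[1.0, -1.0], [-1.0, 1.0]]
    x, y = fictitious_play(pennies, 10_000)
    print("row:", x, "column:", y)
```

In matching pennies the actual play cycles with ever longer runs, but the empirical frequencies drift towards (0.5, 0.5). The talk's dynamics extend this idea to Markov games by adding Q-function estimates that are updated on a separate, slower timescale.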

SPEAKER BIOGRAPHY

Muhammed Omer Sayin has been an Assistant Professor in the Department of Electrical and Electronics Engineering at Bilkent University since September 2021. From September 2019 to August 2021, he was a Postdoctoral Associate at the Laboratory for Information and Decision Systems (LIDS), Massachusetts Institute of Technology (MIT), advised by Asuman Ozdaglar. He received his Ph.D. from the University of Illinois at Urbana-Champaign (UIUC) in December 2019, under the supervision of Tamer Başar. During his Ph.D., he completed two research internships at Toyota InfoTech Labs in Mountain View, CA. He received his B.S. and M.S. degrees from Bilkent University, Turkey, in 2013 and 2015, respectively. The overarching theme of his research is developing the theoretical and algorithmic foundations of learning and autonomy in complex, dynamic, multi-agent systems.

Seminar Date: Friday, 18 February 2022

Seminar Time: 14:00

Seminar Venue: HÜ EEMB Conference Hall and Zoom

The Zoom meeting ID and passcode are shown in the image.